OpenAI API Integration: Completions in PHP

Lately, ChatGPT has become a hot topic among developers and IT enthusiasts. Its ability to imitate human dialogue is astonishing and has attracted wide attention.

With AI tools such as ChatGPT growing in popularity, now is a good time to add AI to your own application.

Today, we will discuss integrating the OpenAI API, using the Completions model, into a PHP website project running on a CloudRaya VM. This will allow you to create a Help Center using the latest technology from OpenAI GPT (generative pre-trained transformer).

Register an OpenAI Account

The first step is to register an OpenAI account.

Access https://platform.openai.com and follow the signup procedure.

After you complete a few verification steps, your account becomes active. Here is what the OpenAI home panel looks like once you are signed in.

Create OpenAI API Key

Now, you can start creating the API Key for OpenAI.

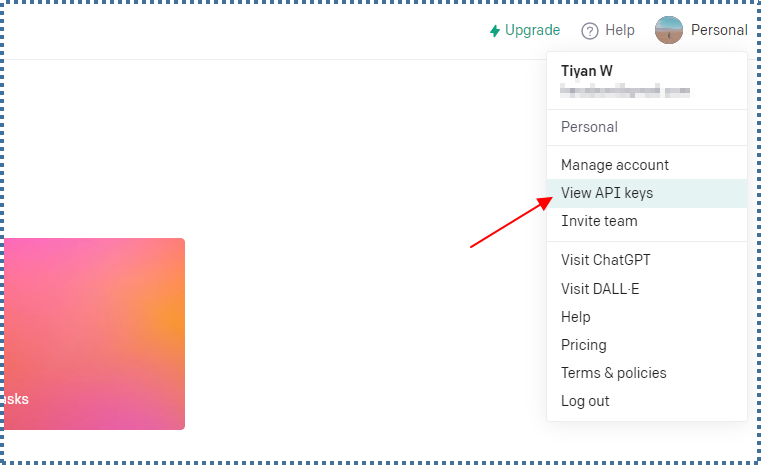

Click your account thumbnail at the top-right corner of your screen and choose View API keys.

On the API Keys page, you can see the API keys you have created previously. These keys authenticate the requests sent from our PHP project.

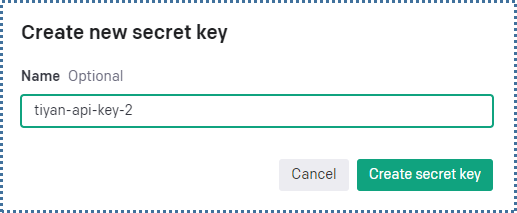

If you have not created any, click + Create new secret key.

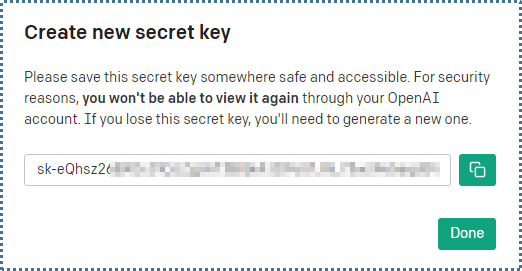

Enter a name for the key, and the API key will be generated in a moment. Save this key right away, because it will not be displayed a second time.
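Because the key is shown only once and gives access to your credit balance, it may be safer to keep it out of the source code. The following is a minimal sketch, assuming the key is stored in an environment variable named OPENAI_API_KEY. Both that name and the helper function loadOpenAiApiKey() are chosen here for illustration and are not part of the tutorial's own code.

```php
<?php

// Read the API key from an environment variable instead of hard-coding it.
// The default variable name OPENAI_API_KEY is an assumption made for this sketch.
function loadOpenAiApiKey(string $envName = 'OPENAI_API_KEY'): string
{
    $key = getenv($envName);

    // getenv() returns false when the variable is not set.
    if ($key === false || trim($key) === '') {
        throw new RuntimeException("Environment variable $envName is not set.");
    }

    return trim($key);
}

// Usage: set the variable in the shell or web server config, then:
// $openaiApiKey = loadOpenAiApiKey();
```

If the variable is missing, the function throws instead of sending a request with an empty key, which makes configuration mistakes easier to spot.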

Balance and Credit Verification

Every API request consumes credit from your OpenAI account balance. First-time registrants receive free trial credit that is valid for a limited time.

At this point, you need to make sure you have an available credit balance in your account.

To check your balance, you can access the Usage page.

For this tutorial, I use the free trial credits given by OpenAI, which expire on July 1st, 2023.

Please note that the API service is billed separately from ChatGPT Plus.

See the OpenAI pricing page to learn more about its pricing model.

The ChatGPT Plus subscription covers only the use of https://chat.openai.com/ and costs up to $20/month.

GPT-based Language Model

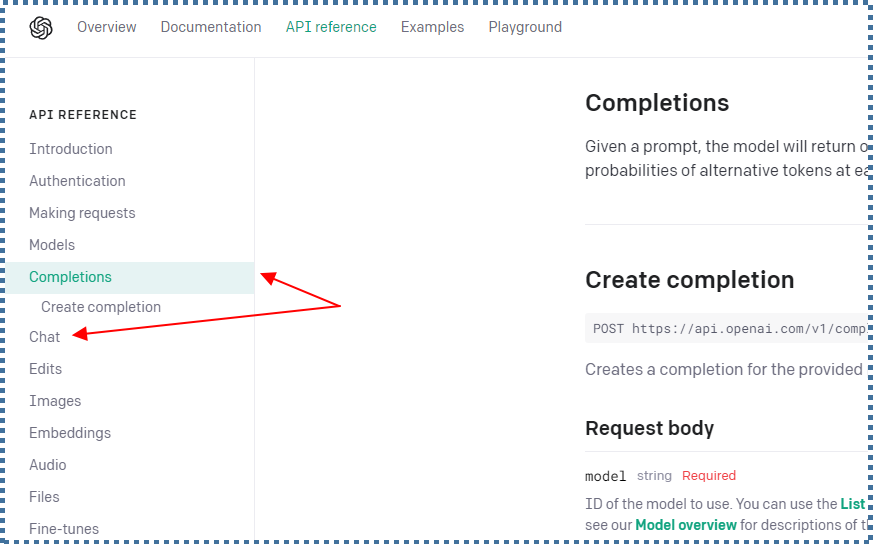

According to the OpenAI API reference documentation, the API offers several model types, including the Completions model and the Chat model.

Completions lets the model generate an output based on the prompt you provide.

Meanwhile, Chat allows users to engage with the model directly. The model responds to each message in sequence, as if the user were talking to a human. This model is useful if you want the AI to produce a more natural conversation.

Each model type has its own API endpoint.

This tutorial focuses on the Completions model.
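The difference between the two endpoints can be sketched as follows. The Completions URL is the one used later in this tutorial; the Chat URL and its "messages" payload shape come from OpenAI's API reference rather than from this article, and the helper name buildRequest() is hypothetical.

```php
<?php

// Endpoint and payload shape for the two model types discussed above.
function buildRequest(string $type, string $model, string $text): array
{
    if ($type === 'completions') {
        // Completions take a single "prompt" string.
        return array(
            'url'  => 'https://api.openai.com/v1/completions',
            'body' => array('model' => $model, 'prompt' => $text),
        );
    }

    if ($type === 'chat') {
        // Chat takes a list of messages, each with a role and content.
        return array(
            'url'  => 'https://api.openai.com/v1/chat/completions',
            'body' => array(
                'model'    => $model,
                'messages' => array(array('role' => 'user', 'content' => $text)),
            ),
        );
    }

    throw new InvalidArgumentException("Unknown model type: $type");
}

$request = buildRequest('completions', 'text-davinci-003', 'Say hello.');
echo $request['url'], "\n";
```

The sketch only builds the request data; sending it is covered in the cURL section of this tutorial.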

About Prompt Engineering

Within the Completions model, there is an important parameter called prompt. What does this prompt do?

A prompt is the text or instruction you give to the AI at the start. This instruction guides how the model creates its response.

With an appropriate prompt, the AI gives better responses. A good prompt contains clear and detailed instructions. As an example, we can look at the scenarios OpenAI provides on its Examples page.

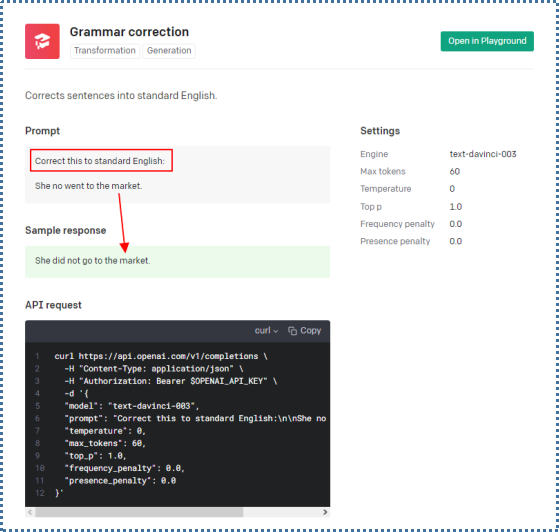

Let’s use Grammar Correction as our example scenario.

The prompt starts with the instruction, which in this case tells the model to correct the input text into standard English. The AI then responds with the grammatically corrected text.

You can build many scenarios beyond those on the example page, as long as the prompt is clear and detailed.
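As a small illustration of this pattern, the instruction and the user's text can be joined into one prompt string. This is a sketch only; the helper name buildPrompt() is hypothetical, and the blank line between the two parts mirrors the Grammar Correction example.

```php
<?php

// Join a fixed instruction and the user's text into one prompt string.
// The blank line between them mirrors the Grammar Correction example.
function buildPrompt(string $instruction, string $userText): string
{
    return trim($instruction) . "\n\n" . trim($userText);
}

$prompt = buildPrompt(
    'Correct this to standard English:',
    'She no went to the market.'
);

echo $prompt;
```

Keeping the instruction separate from the user's text makes it easy to reuse the same instruction for every input.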

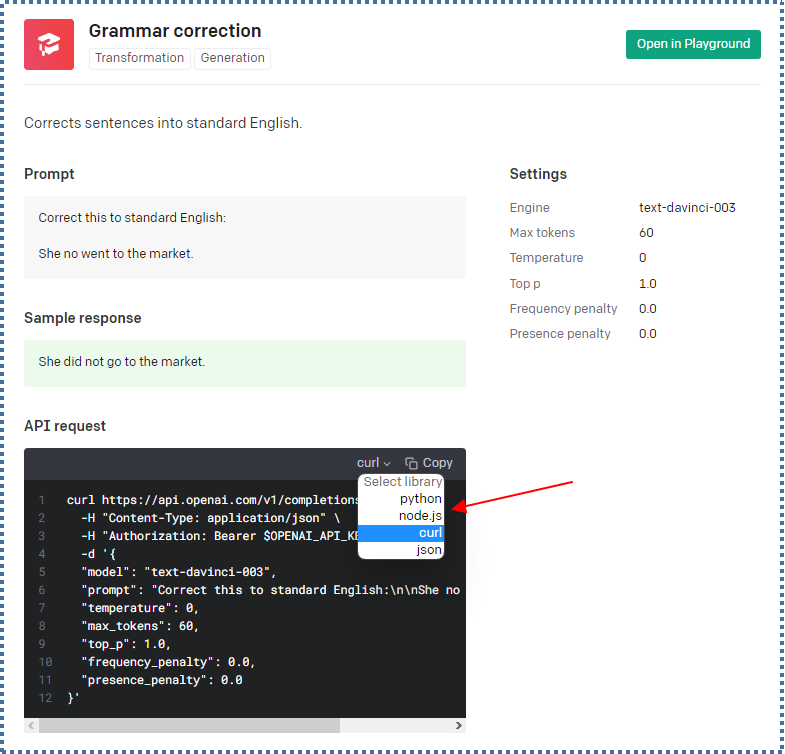

API Integration Request With PHP

The dialog box above offers sample code only in Python, Node.js, cURL, and JSON. To call the API from PHP, we can translate the cURL example into PHP's cURL functions, which send the same HTTP request.

We need several cURL functions, such as curl_init(), curl_setopt(), and curl_exec(). These functions let us set the request options, such as the URL, headers, content, method, and other necessary data.

You can refer to the code below for the result of the conversion and modification:

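```php
<?php

// Show all PHP errors in the browser while testing.
error_reporting(E_ALL);
ini_set('display_errors', 1);

$openaiApiKey = "YOUR_API_KEY";

// Request body for the Completions endpoint.
$data = array(
    "model" => "text-davinci-003",
    "prompt" => "Correct this to standard English:\n\nShe no went to the market.",
    "temperature" => 0,
    "max_tokens" => 60,
    "top_p" => 1,
    "frequency_penalty" => 0.0,
    "presence_penalty" => 0.0
);

$curl = curl_init();

curl_setopt_array($curl, array(
    CURLOPT_URL => "https://api.openai.com/v1/completions",
    CURLOPT_RETURNTRANSFER => true,
    CURLOPT_ENCODING => "",
    CURLOPT_MAXREDIRS => 10,
    CURLOPT_TIMEOUT => 30,
    CURLOPT_HTTP_VERSION => CURL_HTTP_VERSION_1_1,
    CURLOPT_CUSTOMREQUEST => "POST",
    CURLOPT_POSTFIELDS => json_encode($data),
    CURLOPT_HTTPHEADER => array(
        "Authorization: Bearer " . $openaiApiKey,
        "Content-Type: application/json"
    ),
));

$response = curl_exec($curl);

// curl_exec() returns false on a transport error (DNS, timeout, TLS).
if ($response === false) {
    $error = curl_error($curl);
    curl_close($curl);
    exit("cURL error: " . $error);
}

curl_close($curl);

// Decode the JSON body into an associative array.
$result = json_decode($response, true);

// A successful response carries the text in choices[0].text;
// an API error (bad key, no credit) carries an "error" object instead.
if (isset($result['choices'][0]['text'])) {
    echo $result['choices'][0]['text'];
} else {
    echo "API error: " . ($result['error']['message'] ?? "unexpected response");
}

?>
```

For Completions, we will use the endpoint https://api.openai.com/v1/completions.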

The calls error_reporting(E_ALL) and ini_set('display_errors', 1) control PHP error reporting. They make any PHP error appear in the browser, which is very useful for spotting errors while testing, for example when there is an API problem or insufficient credit.

The result of the API request will look like the following in your browser.

She did not go to the market.

Now, you have your very own AI chat. The next step is to integrate it with a better and more interesting interface that suits your application.
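The response handling can also be sketched as a reusable function that returns either the generated text or the API's error message. The helper name extractCompletionText() is hypothetical, and the two JSON bodies below are hand-written samples that follow the response shape in OpenAI's API reference, not captured API output.

```php
<?php

// Pull the generated text out of a Completions response body.
// Returns the API's error message when the request did not succeed.
function extractCompletionText(string $json): string
{
    $result = json_decode($json, true);

    if (!is_array($result)) {
        return 'Error: response was not valid JSON.';
    }
    if (isset($result['error']['message'])) {
        return 'Error: ' . $result['error']['message'];
    }
    if (isset($result['choices'][0]['text'])) {
        // The model often starts its answer with blank lines; trim them.
        return trim($result['choices'][0]['text']);
    }
    return 'Error: no choices in response.';
}

// Hand-written sample bodies for illustration, not captured API output.
$ok  = '{"choices":[{"text":"\n\nShe did not go to the market.","index":0}]}';
$bad = '{"error":{"message":"Incorrect API key provided."}}';

echo extractCompletionText($ok), "\n";
echo extractCompletionText($bad), "\n";
```

Checking for the "error" object first means a bad key or an empty balance produces a readable message instead of a PHP warning about a missing array key.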

▶️ Key Parameters

Before the next step, make sure that you understand the following key parameters governing the result generated by the model.

⏭️ YOUR_API_KEY

This parameter is the API key necessary to authenticate and authorize access to the OpenAI API service.

⏭️ model

This parameter selects the model used to generate the response.

For Completions, the best model at the time of writing is “text-davinci-003”. OpenAI retires older models over time, so check the reference page for the current list of models for each endpoint type.

⏭️ prompt

This parameter holds the instructions given to the model. To generate the best response, make sure to write the prompt clearly and in detail.

⏭️ temperature

By configuring the temperature, we can influence the level of randomness and variation in the response generated by the model. A high temperature produces more varied and creative responses, while a low temperature produces conservative and predictable responses. The API accepts values from 0 to 2; the examples here stay between 0 and 1.

Scenario: Flight Ticket Reservation to Bali

Question: “Hello, I want to reserve a flight ticket to Bali.”

Low Temperature (0.2):

- Chatbot: “Sure, I can help you with that. Please provide me with the date and departure details.”

- The model creates a conservative, to-the-point response.

High Temperature (0.8):

- Chatbot: “Hi! Welcome to the flight ticket reservation service. Bali is a captivating tropical island for you to explore. Now, provide me with your date and departure details, and I will do my flight magic for you!”

- The model creates a creative response that adds to the holiday experience for the user.
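The two scenarios above can be sketched as named presets for the request payload. The style names "precise" and "creative" and the helper buildPayload() are hypothetical; the temperature values are the ones used in the scenarios.

```php
<?php

// Build a Completions payload for a named style.
// The style names and their temperatures follow the two scenarios above.
function buildPayload(string $prompt, string $style = 'precise'): array
{
    $temperatures = array(
        'precise'  => 0.2, // conservative, to-the-point answers
        'creative' => 0.8, // more varied, playful answers
    );

    if (!isset($temperatures[$style])) {
        throw new InvalidArgumentException("Unknown style: $style");
    }

    return array(
        'model'       => 'text-davinci-003',
        'prompt'      => $prompt,
        'temperature' => $temperatures[$style],
        'max_tokens'  => 60,
    );
}

$payload = buildPayload('Hello, I want to reserve a flight ticket to Bali.', 'creative');
echo json_encode($payload, JSON_PRETTY_PRINT), "\n";
```

Naming the presets keeps the numeric values in one place, so the tone of the whole application can be adjusted without touching the request code.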

⏭️ max_tokens

The max_tokens parameter sets the maximum number of tokens the model may generate in its response. A higher limit allows a longer response. Please note that each generated token consumes your credit, so pay attention to the value of this parameter.
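To keep an eye on consumption, the token counts reported in the response can be read from its "usage" object. This is a sketch, assuming the response has already been decoded with json_decode($response, true); the helper name summarizeUsage() is hypothetical and the sample numbers are made up.

```php
<?php

// Summarize token usage from a decoded Completions response.
function summarizeUsage(array $result): string
{
    if (!isset($result['usage'])) {
        return 'No usage data in response.';
    }
    $usage = $result['usage'];
    return sprintf(
        'prompt: %d, completion: %d, total: %d tokens',
        $usage['prompt_tokens'] ?? 0,
        $usage['completion_tokens'] ?? 0,
        $usage['total_tokens'] ?? 0
    );
}

// Hand-written sample for illustration, not captured API output.
$sample = array('usage' => array(
    'prompt_tokens' => 14, 'completion_tokens' => 8, 'total_tokens' => 22,
));
echo summarizeUsage($sample), "\n";
```

Logging this line for each request gives a rough picture of how quickly a given max_tokens setting uses up the balance.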

⏭️ top_p

The top_p parameter controls nucleus sampling: the model considers only the most likely tokens whose combined probability reaches top_p. It affects the variation and content of the generated response. The lower the value, the more conservative and predictable the response; the higher the value, the more varied the response.

Scenario: Tourist spot recommendation in Bali.

Question: “What are the best destinations in Bali?”

top_p Low (0.2):

- Chatbot: “The best tourist destinations in Bali are Kuta Beach, Sanur Beach, and Ubud. These spots offer captivating natural beauty and a rich cultural experience.”

- The model generates a more conservative and predictable response.

top_p High (0.8):

- Chatbot: “It can be hard to find the best tourist destination in Bali! Kuta Beach offers a captivating view, while Ubud offers a combination of nature and art. If you want an adventure, exploring Nusa Penida with its magnificent cliff is an option. Or go to Lovina instead and enjoy the calming sunset. Be sure to choose the destination that suits your interest and preference!”

- The model provides a more varied and creative response as its recommendation.
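The filtering idea behind top_p can be illustrated with a toy example. This is a simplified sketch of nucleus filtering, not OpenAI's implementation; the helper name nucleusFilter() is hypothetical and the probabilities are made up.

```php
<?php

// Keep the most likely tokens until their combined probability reaches top_p.
// This is a toy illustration of the filtering step, not OpenAI's code.
function nucleusFilter(array $probabilities, float $topP): array
{
    arsort($probabilities); // highest probability first, keys preserved

    $kept = array();
    $cumulative = 0.0;
    foreach ($probabilities as $token => $probability) {
        $kept[] = $token;
        $cumulative += $probability;
        if ($cumulative >= $topP) {
            break; // enough probability mass collected
        }
    }
    return $kept;
}

// Made-up probabilities for the next word in a recommendation.
$next = array('Kuta' => 0.5, 'Ubud' => 0.3, 'Lovina' => 0.15, 'Nusa Penida' => 0.05);

echo implode(', ', nucleusFilter($next, 0.2)), "\n";
echo implode(', ', nucleusFilter($next, 0.75)), "\n";
```

With a low top_p only the single most likely word survives, while a higher top_p lets less common words such as a second destination enter the pool the model samples from.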

⏭️ frequency_penalty

frequency_penalty penalizes tokens according to how often they have already appeared in the text so far.

The more often a word has been used, the less likely the model is to use it again, which reduces verbatim repetition and makes the response more varied.

Scenario: Favorite hobby.

frequency_penalty Low (0.2):

- User: “What is your favorite hobby?”

- Chatbot: “My favorite hobby is playing games.”

- User: “Interesting. Are there any other hobbies other than playing games?”

- Chatbot: “My favorite hobby is playing games.”

frequency_penalty High (0.8):

- User: “What is your favorite hobby?”

- Chatbot: “My favorite hobby is playing games.”

- User: “Interesting. Are there any other hobbies other than playing games?”

- Chatbot: “My favorite hobby is playing games. I also like to spend my time with my friends and family.”

⏭️ presence_penalty

presence_penalty penalizes tokens that have already appeared at least once in the text so far, regardless of how often.

This penalty lowers the probability of words that are already present, which nudges the model toward new wording and away from repetitive responses.

Scenario: Favorite hobby.

presence_penalty Low (0.2):

- User: “What is your favorite hobby?”

- Chatbot: “My favorite hobby is playing games.”

- The model repeats “favorite hobby”, which is present in the current input.

presence_penalty High (0.8):

- User: “What is your favorite hobby?”

- Chatbot: “I like to do many things. One of them is playing games.”

- The model generates words not yet present in the current input.

Note: Both frequency_penalty and presence_penalty are meant to increase the diversity of the result, but they work in different ways. frequency_penalty grows with each repetition of a word, while presence_penalty applies once to any word already present in the text.
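The difference between the two penalties can be sketched with the adjustment formula given in OpenAI's API documentation, where each token's score is reduced by its count times frequency_penalty, plus presence_penalty once if the count is above zero. The helper name applyPenalties() is hypothetical, and the scores and counts are made up for illustration.

```php
<?php

// Adjust token scores (logits) using the formula in OpenAI's API docs:
//   score = score - count * frequency_penalty - (count > 0 ? 1 : 0) * presence_penalty
// $counts holds how many times each token already appeared in the text so far.
function applyPenalties(array $logits, array $counts, float $frequencyPenalty, float $presencePenalty): array
{
    $adjusted = array();
    foreach ($logits as $token => $logit) {
        $count = $counts[$token] ?? 0;
        $adjusted[$token] = $logit
            - $count * $frequencyPenalty
            - ($count > 0 ? 1 : 0) * $presencePenalty;
    }
    return $adjusted;
}

// Made-up scores: "games" was already used three times, "family" never.
$logits = array('games' => 2.0, 'family' => 1.0);
$counts = array('games' => 3);

print_r(applyPenalties($logits, $counts, 0.5, 0.0)); // frequency penalty only
print_r(applyPenalties($logits, $counts, 0.0, 0.5)); // presence penalty only
```

With the frequency penalty, the score of "games" drops three times over because it was used three times; with the presence penalty, it drops only once. In both cases the unused word "family" keeps its score.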

See the OpenAI API documentation to find out more about the parameters.

Or, you can try out the parameters directly on the Playground page.

Please note that using the Playground also consumes your credit balance.

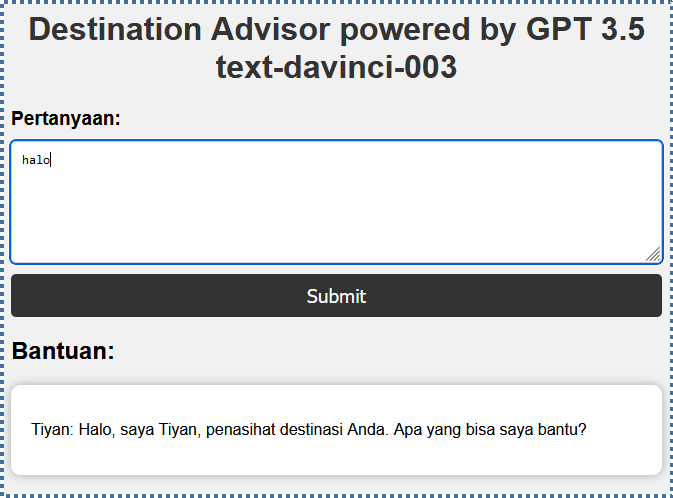

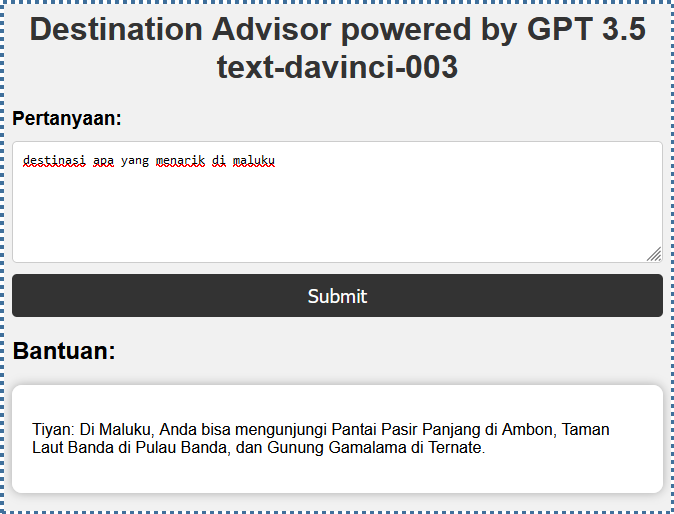

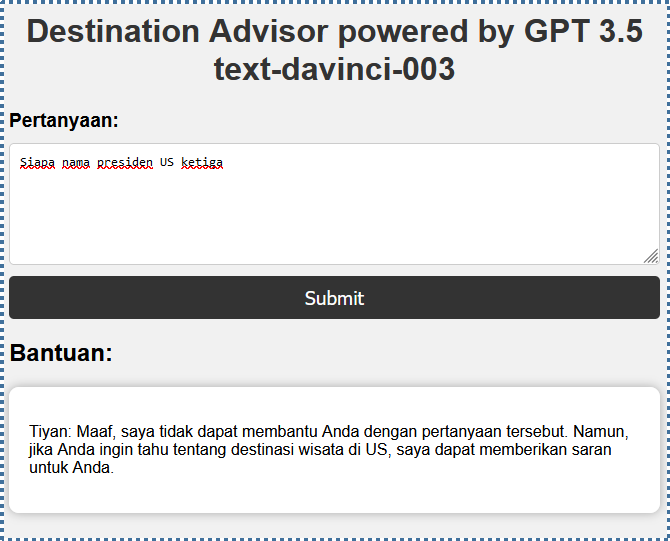

Result of Implementation

Now that we understand the function of the main parameters and the request structure of the OpenAI API, it’s time to implement them in an application.

For this tutorial, I created a simple PHP application on a CloudRaya VM. It works roughly like a trip advisor, suggesting interesting spots for the user to visit.

Within the prompt, I have declared the conditions and limitations for the bot to work around.
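A prompt with such conditions and limitations might look like the sketch below. The wording of the instruction, the helper name buildTripAdvisorPrompt(), and the parameter values are all hypothetical illustrations; the prompt used in the tutorial's application is not shown in this article.

```php
<?php

// Hypothetical prompt for a trip-advisor bot. The wording below is an
// illustration; the prompt used in the tutorial's application is not shown.
function buildTripAdvisorPrompt(string $question): string
{
    $instruction = "You are a trip advisor for visitors to Bali. "
        . "Suggest one interesting spot and explain why in two sentences. "
        . "If the question is not about travel, politely decline.";

    return $instruction . "\n\nQuestion: " . trim($question) . "\nAnswer:";
}

$data = array(
    'model'       => 'text-davinci-003',
    'prompt'      => buildTripAdvisorPrompt('Where can I watch the sunset?'),
    'temperature' => 0.8,
    'max_tokens'  => 120,
);

echo $data['prompt'], "\n";
```

The conditions (one spot, two sentences) and the limitation (travel questions only) sit in the fixed instruction, so the user's question cannot change them by accident.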

Conclusion

This concludes our tutorial on integrating the OpenAI API, using the Completions model, into a PHP website project on a CloudRaya VM.

Applying artificial intelligence like this to your website can improve user interaction and the overall experience of your visitors.

Find more interesting tutorials on the CloudRaya Knowledge Base page, or visit our YouTube channel to watch them in video form.